Hydropower Milestone and Nuclear Revival: Is Pink Hydrogen Next?

The Week That Was: September 21-27

NOTE: “The Week That Was” is a recap of ideas shared over the last seven days.

Spare Parts: What Caught My Eye This Week

Site C, one of Canada's largest hydroelectric projects, is nearing completion in British Columbia

After nearly $16 billion in investment (up from an original estimate of $6.6 billion) and nine years of construction, the project will soon bring 1,100 megawatts (MW) of capacity to BC's grid. It is expected to generate about 5,100 gigawatt hours (GWh) annually, enough to power approximately 450,000 homes in BC.
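As a quick sanity check on those figures, the sketch below derives the capacity factor and per-home consumption they imply. It uses only the numbers quoted above. The 8,760 hours-per-year constant (a non-leap year) and the function names are my own illustrative choices.

```python
HOURS_PER_YEAR = 8760  # assumption: non-leap year


def capacity_factor(capacity_mw: float, annual_gwh: float) -> float:
    """Share of the theoretical maximum annual output that is actually generated."""
    max_gwh = capacity_mw * HOURS_PER_YEAR / 1000  # MWh -> GWh
    return annual_gwh / max_gwh


def mwh_per_home(annual_gwh: float, homes: int) -> float:
    """Average annual consumption per home implied by the 'homes powered' claim."""
    return annual_gwh * 1000 / homes  # GWh -> MWh


# Figures quoted in the text for Site C
site_c_cf = capacity_factor(capacity_mw=1100, annual_gwh=5100)
site_c_home = mwh_per_home(annual_gwh=5100, homes=450_000)

print(f"Implied capacity factor: {site_c_cf:.0%}")
print(f"Implied use per home: {site_c_home:.1f} MWh/year")
```

The quoted numbers imply a capacity factor of roughly 53% and about 11.3 MWh per home per year.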

It’s easy to forget Canada’s rich hydro endowment. Significant hydropower potential remains untapped, with estimates suggesting capacity could be doubled.

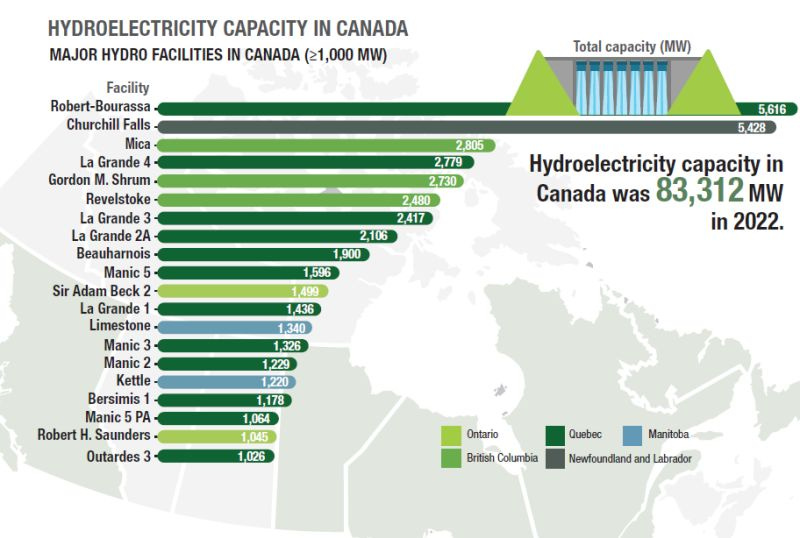

A few numbers for context:

• Total hydro capacity in Canada exceeds 83 GW. For comparison, the US nuclear fleet is about 97 GW (while US hydro capacity is around 102 GW).

• Site C will be the 21st hydro facility in Canada with capacity of over 1 GW.

• ~60% of Canada’s electricity generation comes from hydro, with provinces and territories such as Newfoundland and Labrador, Manitoba, Quebec, BC, and Yukon sourcing more than 85% of their power from hydro.

Critics point to the community and environmental impact of these projects. For example, Site C will flood 5,550 hectares (13,714 acres) of land, creating an 83-km-long reservoir and raising concerns about wildlife, fish habitats, and local ecosystems.

What do you think? Can large-scale hydro projects still play a leading role in the future of North American energy? How much of Canada's remaining hydro potential will get developed?

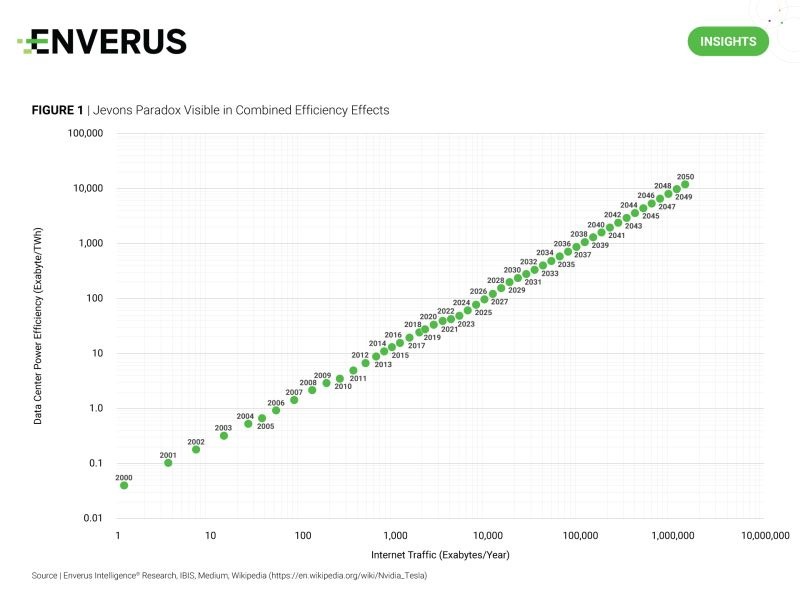

The simplicity of our forecast might make it seem like we’ve just extrapolated history, but that’s far from the case. We’ve deeply analyzed the core fundamentals of data center energy demand and developed a forecast that aligns with historical trends, based on these insights:

The technology learning curves at both the chip and facility levels in data centers are a counterforce to the surge in compute demand driven by AI.

For the energy demand curve to shift bullishly, the growth in compute demand must outpace these learning curves. Yet, there’s a feedback loop here—more demand drives more cycles, which in turn accelerates learning.

Conversely, if the learning curves outpace compute demand, we have a bearish energy demand scenario. The feedback loop plays a role here too: fewer cycles lead to less learning.
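The tug-of-war between compute growth and learning curves can be sketched as a toy model. This is only an illustration, not the forecast model itself. The starting demand, the growth rates, and the function name are all hypothetical, and the feedback loop between demand and learning is deliberately left out.

```python
def energy_demand_path(start_twh, compute_growth, efficiency_gain, years):
    """Project energy demand when compute grows while efficiency improves.

    compute_growth: annual growth in compute demand (hypothetical rate)
    efficiency_gain: annual growth in compute delivered per unit of energy,
                     standing in for chip- and facility-level learning curves
    """
    path = [start_twh]
    for _ in range(years):
        # Energy = compute / (compute per unit of energy)
        path.append(path[-1] * (1 + compute_growth) / (1 + efficiency_gain))
    return path


# All inputs below are made-up values chosen only to show the three regimes.
bullish = energy_demand_path(100.0, compute_growth=0.40, efficiency_gain=0.25, years=5)
bearish = energy_demand_path(100.0, compute_growth=0.20, efficiency_gain=0.30, years=5)
flat = energy_demand_path(100.0, compute_growth=0.25, efficiency_gain=0.25, years=5)

for name, path in [("bullish", bullish), ("bearish", bearish), ("flat", flat)]:
    print(f"{name:8s} year-5 demand: {path[-1]:.1f} TWh")
```

Energy demand rises only when compute growth exceeds the efficiency gain, falls when the learning curves win, and stays flat when the two rates match.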

Of course, real-world constraints also come into play. Building 100+ acre facilities with multiple high-voltage connections and other significant requirements isn’t easy. Even if the demand exists, we still need to build the infrastructure. So the key question becomes: how many data centers can we realistically build, and how quickly?

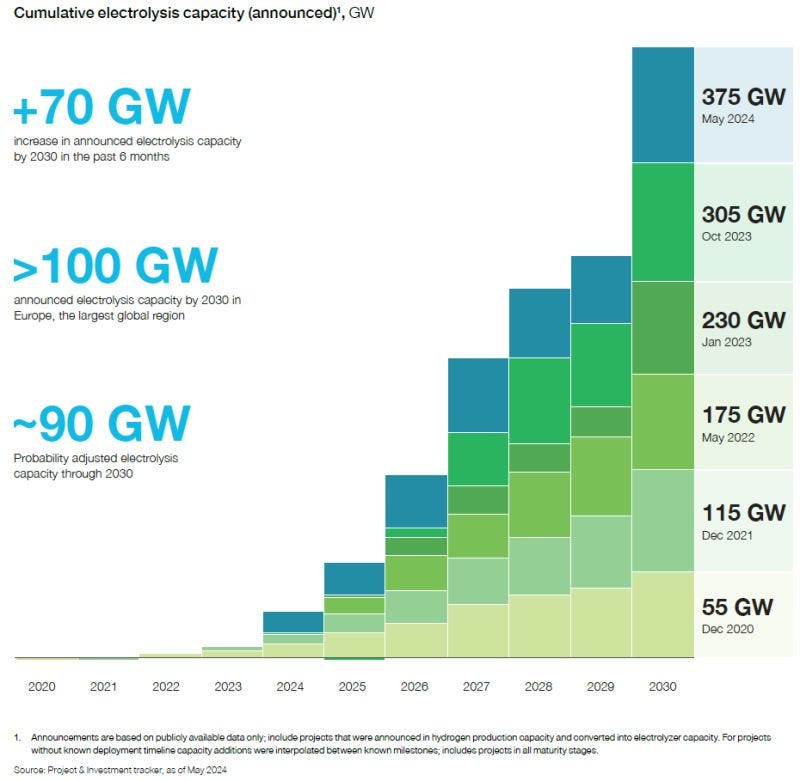

According to the Hydrogen Council's “Hydrogen Insights September 2024” report, a staggering 375 GW of announced electrolyzer capacity is slated to come online globally by 2030, with North America accounting for over 30 GW—up 60% from their previous assessment.

To put this in perspective, if North America's planned 30 GW of electrolyzer capacity is fully realized by 2030, supplying it from gas-fired generation would equate to around 4.8 Bcf/d of natural gas consumption (assuming a 90% electrolyzer capacity factor and a heat rate of 7.42 MMBtu/MWh). However, reaching that target on time remains a challenge, and hydrogen is still a long way from becoming the dominant energy carrier. Even so, the data shows a clear upward trend across the hydrogen value chain.
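The gas-equivalent figure can be reproduced with a few lines of arithmetic. The sketch below is a back-of-the-envelope check, not the original calculation. It assumes the heat rate is expressed in MMBtu/MWh and that gas contains about 1 MMBtu per Mcf; the text states neither, and the function name is illustrative.

```python
def gas_equivalent_bcfd(capacity_gw, capacity_factor,
                        heat_rate_mmbtu_per_mwh, mmbtu_per_mcf=1.0):
    """Daily gas burn (Bcf/d) needed to generate the power an electrolyzer fleet draws.

    mmbtu_per_mcf=1.0 is an assumed round-number energy content for natural gas.
    """
    average_mw = capacity_gw * 1000 * capacity_factor        # GW -> average MW drawn
    mwh_per_day = average_mw * 24                            # daily energy demand
    mmbtu_per_day = mwh_per_day * heat_rate_mmbtu_per_mwh    # fuel input required
    mcf_per_day = mmbtu_per_day / mmbtu_per_mcf
    return mcf_per_day / 1e6                                 # Mcf -> Bcf


# Inputs quoted in the text: 30 GW, 90% capacity factor, heat rate of 7.42
north_america_bcfd = gas_equivalent_bcfd(30, 0.90, 7.42)
print(f"Gas equivalent: {north_america_bcfd:.1f} Bcf/d")
```

Under these assumptions the result rounds to 4.8 Bcf/d, matching the figure above. A higher assumed energy content per Mcf would give a slightly lower number.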

Despite this rapid growth, announced capacity remains far below the levels needed to achieve “net-zero” targets. In other words, visible electrolyzer power demand could already rival that of data centers, yet hydrogen capacity still falls short of what broader decarbonization goals require. Because both data centers and hydrogen production facilities prefer to run at high capacity factors and need reliable, large-scale power sources, nuclear energy becomes an attractive option for supporting hydrogen production.

The big question: can hydrogen scale up to meet these ambitious targets? And how will nuclear energy shape its future adoption?

Constellation Energy and Microsoft have just signed a groundbreaking 20-year power purchase agreement, marking Constellation’s largest deal to date. Under this contract, Microsoft will source 835 megawatts of power from the restarted Three Mile Island Unit 1 reactor to fuel its data centers and expand its AI capabilities.

This isn’t an isolated event—earlier this year, Talen Energy Corporation inked a deal with Amazon Web Services (AWS) in Pennsylvania. The agreement centers around the 960-megawatt Cumulus data center campus, powered by Talen's adjacent 2,494-MW Susquehanna Nuclear Plant. And Oracle has made headlines with its bold plan to design a data center requiring over a gigawatt of electricity, fueled by three small modular nuclear reactors. The trend is clear: Big Tech is increasingly looking to nuclear power for its energy-intensive operations.

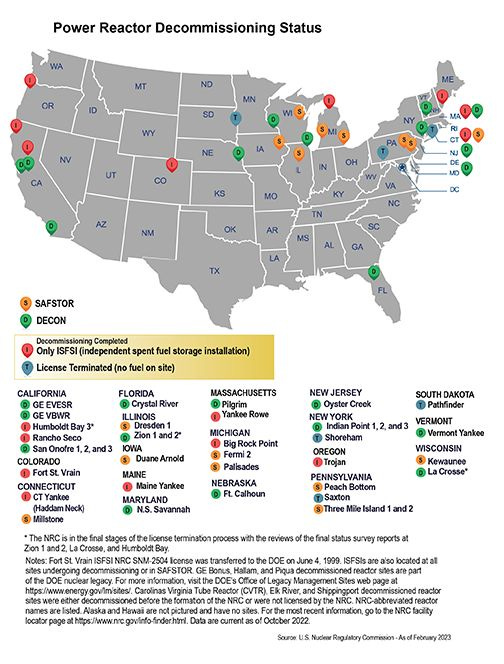

As for Three Mile Island (TMI), its Unit 1 reactor sits next to TMI Unit 2, which infamously shut down in 1979 after a partial meltdown. Unit 2 is currently being decommissioned by EnergySolutions. The map below highlights several U.S. nuclear reactors in various stages of decommissioning, with the TMI site labeled as SAFSTOR, a status under which a plant is kept in safe storage while decontamination is deferred. Another notable site in SAFSTOR is Holtec International’s Palisades Power Plant, which is also under consideration for a restart.

The question now is whether nuclear power will become the go-to energy source for Big Tech. Can other forms of generation compete with nuclear’s scalability, low carbon footprint, and unmatched reliability? The future of energy may very well hinge on the answer.